Bridging the modality gap in multimodal eye disease screening: learning modality shared-specific features via multi-level regularization

Jiayue Zhao*, Shiman Li, Yi Hao, Chenxi Zhang†

IEEE Signal Processing Letters (IF:3.2)

Abstract

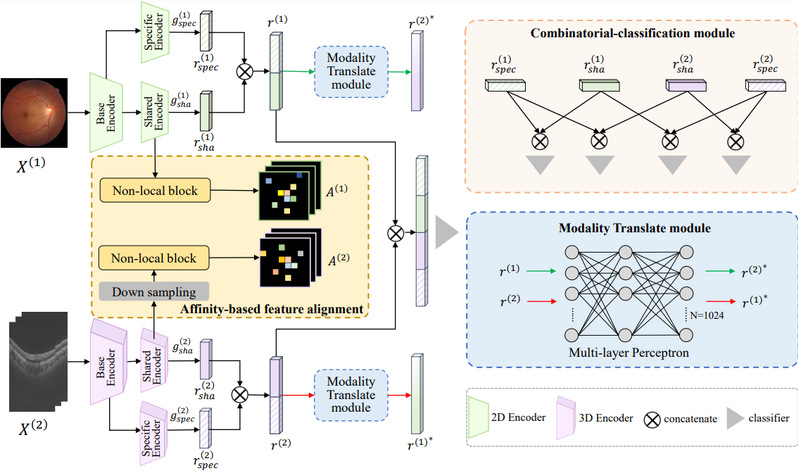

Color fundus photography (CFP) and optical coherence tomography (OCT) are two common modalities in eye disease screening, and they provide crucial complementary information for diagnosis. However, existing multimodal learning methods cannot fully leverage the information in each modality because of the large dimensional and semantic gap between 2D CFP and 3D OCT images, which leads to suboptimal classification performance. To bridge this modality gap, we propose a novel feature disentanglement method that decomposes features into modality-shared and modality-specific components. We design a multi-level regularization strategy, comprising intra-modality, inter-modality, and intra-inter-modality regularization, to facilitate effective learning of the modality shared-specific features. Our method achieves state-of-the-art performance on two eye disease diagnosis tasks using two publicly available datasets and promises to serve as a useful tool for multimodal eye disease diagnosis. The source code is publicly available at https://github.com/yuerjiar/ShaSpec.
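To make the idea of shared-specific disentanglement concrete, the following is a minimal sketch and not the authors' implementation. It assumes each modality's encoder output is a flat feature vector whose first half is treated as the modality-shared part and whose second half is treated as the modality-specific part. The function names (`split_half`, `disentangle_penalties`) and the two cosine-based penalty terms are hypothetical stand-ins chosen for illustration; the abstract does not specify the actual regularizers, which are defined in the paper and the linked repository.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-12)


def split_half(features):
    """Assumption: first half = modality-shared part, second half = modality-specific part."""
    mid = len(features) // 2
    return features[:mid], features[mid:]


def disentangle_penalties(cfp_features, oct_features):
    """Return two illustrative penalties (both are assumptions, not the paper's losses).

    alignment:  pulls the shared parts of the two modalities together.
    separation: pushes the shared and specific parts within each modality apart.
    """
    cfp_shared, cfp_specific = split_half(cfp_features)
    oct_shared, oct_specific = split_half(oct_features)

    # Cross-modality term: shared features of CFP and OCT should point the same way.
    alignment = 1.0 - cosine(cfp_shared, oct_shared)

    # Within-modality term: shared and specific features should not overlap.
    separation = (
        cosine(cfp_shared, cfp_specific) ** 2
        + cosine(oct_shared, oct_specific) ** 2
    )
    return alignment, separation


# Toy feature vectors standing in for encoder outputs (hypothetical values).
cfp = [1.0, 0.0, 0.0, 1.0]
oct_vec = [1.0, 0.0, 0.0, -1.0]
alignment, separation = disentangle_penalties(cfp, oct_vec)
print(alignment, separation)
```

In a training setup, penalties of this kind would typically be weighted and added to the classification loss, so that the shared features capture what CFP and OCT agree on while the specific features retain what is unique to each modality.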