MMSleepGNet: Mixed Multi-Branch Sequential Fusion Model Based on Graph Convolutional Network for Automatic Sleep Staging

Xinrong Chen, Yufang Zhao, Shu Shen, Xiao Chen

IEEE Transactions on Instrumentation and Measurement (IF = 5.6)

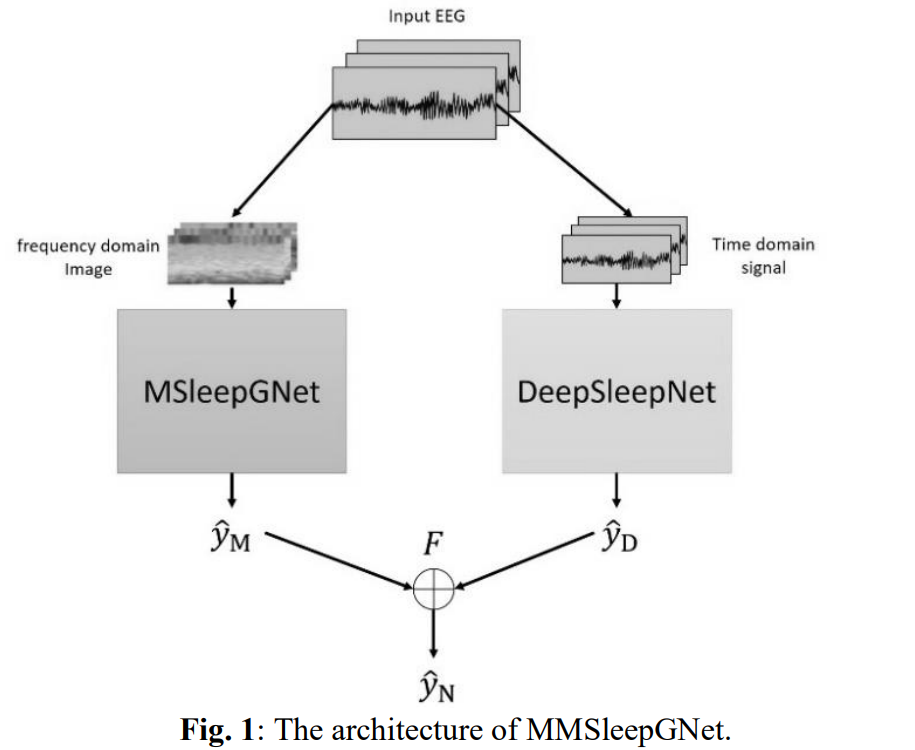

Automatic sleep staging is critical to expanding sleep assessment and diagnosis, and it can serve millions of people who experience sleep deprivation and sleep disorders. However, extracting features from multiple EEG epochs and classifying all sleep stages accurately and automatically remain difficult, and how to fuse these features is a key problem in automatic sleep staging. In this paper, we propose MMSleepGNet, a novel sequence-to-sequence sleep staging model that learns a joint representation from both raw signals and time-frequency images. Instead of traditional fusion methods, the proposed model uses the confidence interval of the sleep stage probabilities to form an appropriate fusion strategy, which avoids the deviation caused by different center points. For the time-frequency images, a novel multi-branch strategy is employed at the sequence processing level. On the main branch, a Graph Convolutional Network (GCN) is added to mine the connections between EEG epochs, which yields faster convergence during training and more accurate classification with fewer training iterations. Experimental results on two public sleep datasets, Sleep-EDF-20 and Sleep-EDF-78, show that the proposed multi-branch strategy based on time-frequency images performs well and achieves better overall accuracy on both datasets. Furthermore, the fusion method based on time-domain and frequency-domain characteristics achieves an overall accuracy of about 88.4% on Sleep-EDF-20 (±30 min) and 83.8% on Sleep-EDF-78 (±30 min), outperforming state-of-the-art methods on these datasets. This study may lead to a precise automatic sleep staging method with the potential to replace manual sleep staging.
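The abstract does not say how the graph over EEG epochs is built or how the GCN on the main branch is parameterized. As a rough illustration only, the sketch below applies the standard graph convolution of Kipf and Welling, ReLU(D^{-1/2}(A + I)D^{-1/2} H W), to a sequence of per-epoch feature vectors so that each epoch's representation mixes in information from its neighbours. The chain-shaped graph that links each epoch to others within `window` steps, the helper names `normalized_adjacency`, `gcn_layer` and `softmax`, the sequence length of 20 epochs, the 128-dimensional features, the 5 output stages, and the random weights are all hypothetical assumptions, not details of MMSleepGNet.

```python
import numpy as np


def normalized_adjacency(num_epochs: int, window: int = 1) -> np.ndarray:
    """Hypothetical epoch graph: link each epoch to neighbours within `window`
    steps (self-loop included), then normalize as D^{-1/2} (A + I) D^{-1/2}."""
    a = np.zeros((num_epochs, num_epochs))
    for i in range(num_epochs):
        for j in range(max(0, i - window), min(num_epochs, i + window + 1)):
            a[i, j] = 1.0  # j == i gives the self-loop (the "+ I" term)
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    # Broadcasting scales row i by d_i^{-1/2} and column j by d_j^{-1/2}.
    return d_inv_sqrt[:, None] * a * d_inv_sqrt[None, :]


def gcn_layer(h: np.ndarray, a_hat: np.ndarray, w: np.ndarray) -> np.ndarray:
    """One graph convolution: aggregate neighbouring epochs, project, apply ReLU."""
    return np.maximum(a_hat @ h @ w, 0.0)


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along `axis`."""
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


# Assumed sizes: 20 consecutive 30-s epochs, 128-d features per epoch,
# 5 sleep stages (e.g. W, N1, N2, N3, REM).
rng = np.random.default_rng(0)
num_epochs, feat_dim, hidden_dim, num_stages = 20, 128, 64, 5
h = rng.standard_normal((num_epochs, feat_dim))  # stand-in for per-epoch features
a_hat = normalized_adjacency(num_epochs, window=2)
w1 = rng.standard_normal((feat_dim, hidden_dim)) * 0.1
w2 = rng.standard_normal((hidden_dim, num_stages)) * 0.1

# Two propagation steps; the last one has no ReLU so it yields stage logits.
logits = a_hat @ gcn_layer(h, a_hat, w1) @ w2
probs = softmax(logits)  # one probability distribution over stages per epoch
```

Because the normalized adjacency is banded, each layer only mixes an epoch with its temporal neighbours; stacking layers widens that context. A learned or similarity-based adjacency would be an equally plausible alternative, since the abstract does not specify the graph.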