Boosting Point-BERT by Multi-choice Tokens

Kexue Fu*, Mingzhi Yuan*, Shaolei Liu, Manning Wang†

IEEE Transactions on Circuits and Systems for Video Technology (IF=5.859)

Abstract

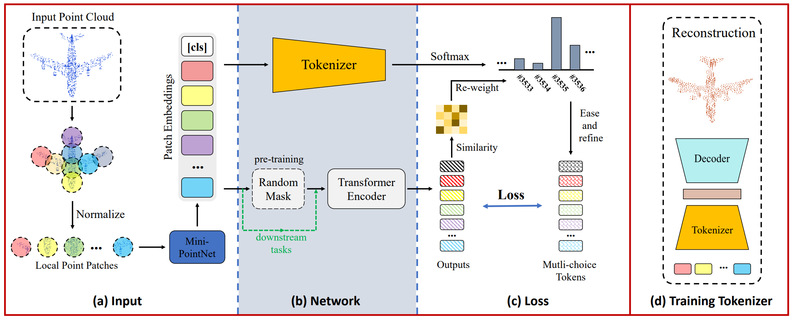

Masked language modeling (MLM) has become one of the most successful self-supervised pre-training tasks. Inspired by its success, Point-BERT, a pioneering work on point clouds, proposed masked point modeling (MPM) to pre-train a point transformer on a large-scale unannotated dataset. Despite its strong performance, we find that the inherent difference between language and point clouds tends to cause ambiguous tokenization of point clouds, and no gold standard is available for point cloud tokenization. Point-BERT uses a discrete Variational AutoEncoder (dVAE) as its tokenizer, but the dVAE may assign different token ids to semantically similar patches and the same token id to semantically dissimilar patches. To tackle these problems, we propose McP-BERT, a pre-training framework with multi-choice tokens. Specifically, we relax the single-choice constraint that Point-BERT places on patch token ids and provide multi-choice token ids for each patch as supervision. Moreover, we utilize the high-level semantics learned by the transformer to further refine these supervision signals. Extensive experiments on point cloud classification, few-shot classification, and part segmentation demonstrate the superiority of our method; e.g., the pre-trained transformer achieves 94.1% accuracy on ModelNet40, 84.28% accuracy on the hardest setting of ScanObjectNN, and new state-of-the-art performance on few-shot learning. Our method improves the performance of Point-BERT on all downstream tasks without extra computational overhead.
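To make the contrast between single-choice and multi-choice supervision concrete, here is a minimal sketch in plain Python. It assumes the tokenizer exposes per-patch logits over a codebook of token ids, and it approximates "multi-choice token ids" with a soft target (the tokenizer's softmax distribution) instead of a single argmax id. The function names, the `temperature` parameter, and the linear blend in `refine_targets` are hypothetical illustrations, not McP-BERT's actual formulation.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable log-softmax over a codebook of token ids."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    lse = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - lse for x in scaled]


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Probability distribution over token ids; a lower temperature gives a sharper target."""
    return [math.exp(lp) for lp in log_softmax(logits, temperature)]


def single_choice_loss(pred_logits: Sequence[float], tokenizer_logits: Sequence[float]) -> float:
    """Single-choice target: cross-entropy against the tokenizer's argmax token id."""
    target_id = max(range(len(tokenizer_logits)), key=lambda i: tokenizer_logits[i])
    return -log_softmax(pred_logits)[target_id]


def multi_choice_loss(pred_logits: Sequence[float], target_probs: Sequence[float]) -> float:
    """Soft target: cross-entropy against a distribution over several candidate token ids."""
    log_probs = log_softmax(pred_logits)
    return -sum(t * lp for t, lp in zip(target_probs, log_probs))


def refine_targets(tokenizer_probs: Sequence[float],
                   transformer_probs: Sequence[float],
                   alpha: float = 0.5) -> List[float]:
    """HYPOTHETICAL refinement: linearly blend the tokenizer's soft target with the
    transformer's own predicted distribution (an assumption, not the paper's rule)."""
    return [(1 - alpha) * t + alpha * s for t, s in zip(tokenizer_probs, transformer_probs)]


if __name__ == "__main__":
    # Toy codebook with 3 token ids for one masked patch.
    tokenizer_logits = [2.0, 1.9, -3.0]  # ids 0 and 1 are near-ties for the tokenizer
    pred_logits = [0.0, 3.0, -3.0]       # the model prefers id 1

    soft_target = softmax(tokenizer_logits)
    print("single-choice loss:", round(single_choice_loss(pred_logits, tokenizer_logits), 3))
    print("multi-choice loss:", round(multi_choice_loss(pred_logits, soft_target), 3))

    refined = refine_targets(soft_target, softmax(pred_logits), alpha=0.3)
    print("refined target:", [round(p, 3) for p in refined])
```

With the near-tie above, the single-choice loss treats a prediction of id 1 as simply wrong, while the soft target credits it in proportion to the tokenizer's own uncertainty. Lowering `temperature` in `softmax` sharpens the target toward a one-hot vector and recovers the single-choice loss as a limiting case.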