Wavelet-based Self-supervised Learning for Multi-scene Image Fusion

Shaolei Liu*, Linhao Qu*, Qin Qiao†, Manning Wang†, Zhijian Song†

Neural Computing and Applications (2022, IF=5.606)

Abstract

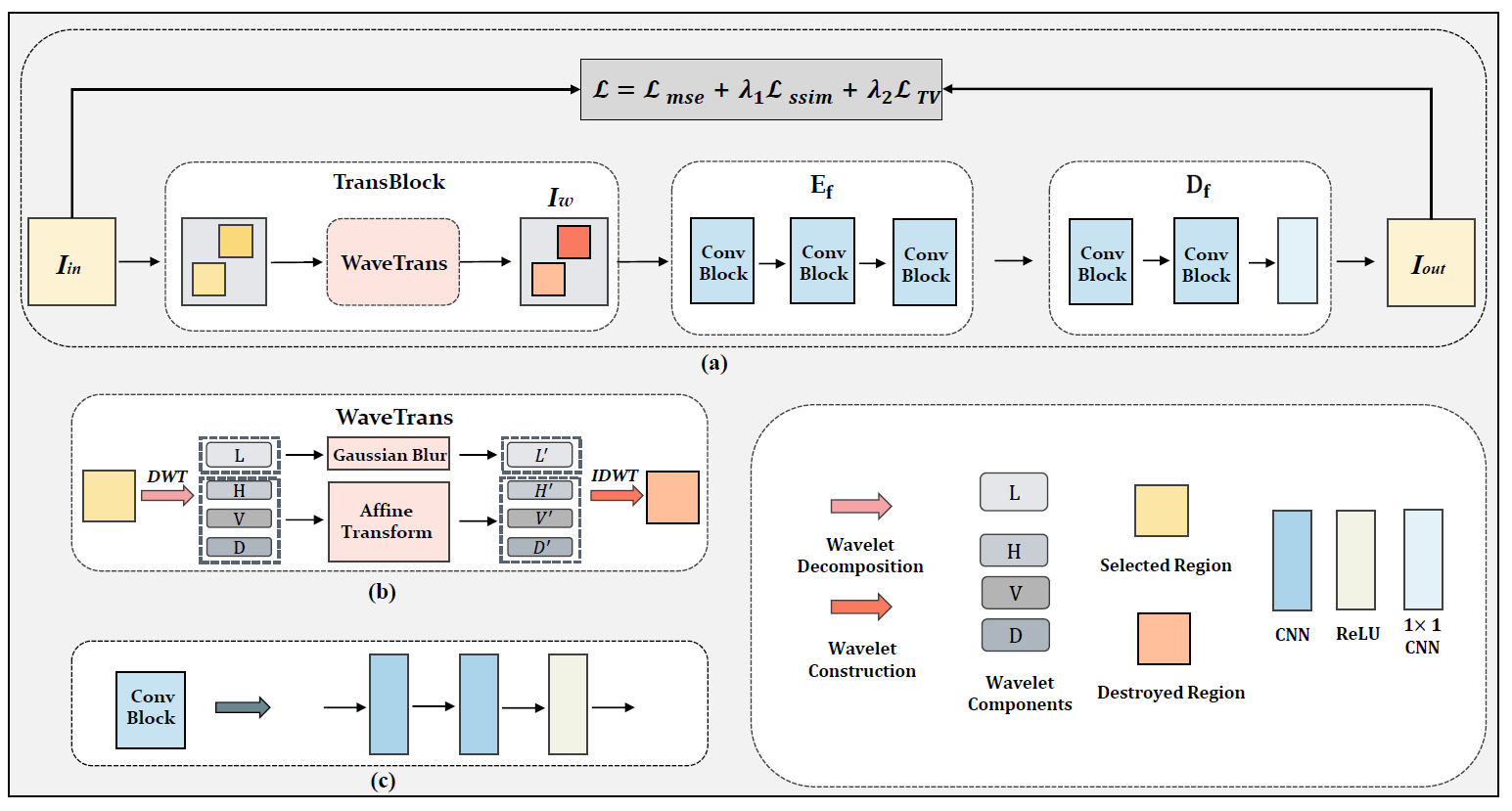

Image fusion provides more comprehensive information by integrating images from different sensors, and intensive research has been devoted to using deep learning to fuse such images more effectively. However, most existing image fusion methods are specific to a single scene and have substantial model complexity, and none of the current deep learning-based methods offers noise suppression. In this paper, we propose a simple and effective unified image fusion framework with noise suppression capability. The core of the proposed method is a novel self-supervised image reconstruction task. Specifically, we destroy several selected regions of an image in the frequency domain using the discrete wavelet transform and train an encoder-decoder model to reconstruct the original image. In the fusion phase, the two images to be fused are each passed through the encoder, the resulting features are averaged, and the averaged features are decoded to obtain the final fused image. To keep the fusion network small, we build the encoder-decoder model as a three-layer convolutional neural network. As a result, the fusion phase is much faster than the latest works (0.0082 s on average). We conducted experiments on four image fusion scenarios and designed an additional synthetic experiment on fusing noisy images. Both subjective and objective evaluations demonstrate that the proposed method outperforms existing state-of-the-art approaches in these experiments.
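The two steps described in the abstract, destroying image regions in the wavelet domain and fusing by averaging encoder features, can be illustrated with a minimal NumPy sketch. Everything specific here is an assumption made for illustration and is not taken from the paper: the single-level Haar wavelet, zeroing coefficients as the form of "destruction", the region count and size, and the hypothetical `encoder` and `decoder` callables that stand in for the trained three-layer network.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)


def haar_dwt2(x):
    """Single-level orthonormal 2D Haar DWT; height and width must be even."""
    # Transform along rows (pairs of adjacent rows).
    lo = (x[0::2, :] + x[1::2, :]) / SQRT2
    hi = (x[0::2, :] - x[1::2, :]) / SQRT2
    # Transform along columns (pairs of adjacent columns).
    ll = (lo[:, 0::2] + lo[:, 1::2]) / SQRT2
    lh = (lo[:, 0::2] - lo[:, 1::2]) / SQRT2
    hl = (hi[:, 0::2] + hi[:, 1::2]) / SQRT2
    hh = (hi[:, 0::2] - hi[:, 1::2]) / SQRT2
    return ll, lh, hl, hh


def haar_idwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2; reconstructs the image exactly."""
    h, w = ll.shape
    lo = np.empty((h, 2 * w))
    hi = np.empty((h, 2 * w))
    lo[:, 0::2] = (ll + lh) / SQRT2
    lo[:, 1::2] = (ll - lh) / SQRT2
    hi[:, 0::2] = (hl + hh) / SQRT2
    hi[:, 1::2] = (hl - hh) / SQRT2
    x = np.empty((2 * h, 2 * w))
    x[0::2, :] = (lo + hi) / SQRT2
    x[1::2, :] = (lo - hi) / SQRT2
    return x


def destroy_regions(image, rng, n_regions=4, size=4):
    """Zero random patches of random sub-bands (assumed form of destruction)."""
    bands = [b.copy() for b in haar_dwt2(image)]
    h, w = bands[0].shape
    for _ in range(n_regions):
        band = bands[rng.integers(0, 4)]   # pick LL, LH, HL, or HH
        r = rng.integers(0, h - size + 1)  # top-left corner of the patch
        c = rng.integers(0, w - size + 1)
        band[r:r + size, c:c + size] = 0.0
    return haar_idwt2(*bands)


def fuse(img_a, img_b, encoder, decoder):
    """Encode both images, average the features, decode the result."""
    return decoder(0.5 * (encoder(img_a) + encoder(img_b)))
```

In a training loop, `destroy_regions(image, rng)` would supply the corrupted input and `image` the reconstruction target; at inference, `fuse` would be called with the trained encoder and decoder. With identity functions in place of the network, `fuse` reduces to a pixel-wise average, which is a convenient sanity check.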