TransMEF: A Transformer-Based Multi-Exposure Image Fusion Framework via Self-Supervised Multi-Task Learning

Linhao Qu*, Shaolei Liu*, Manning Wang†, Zhijian Song†

Accepted by the Thirty-Sixth AAAI Conference on Artificial Intelligence (AAAI2022)

Abstract

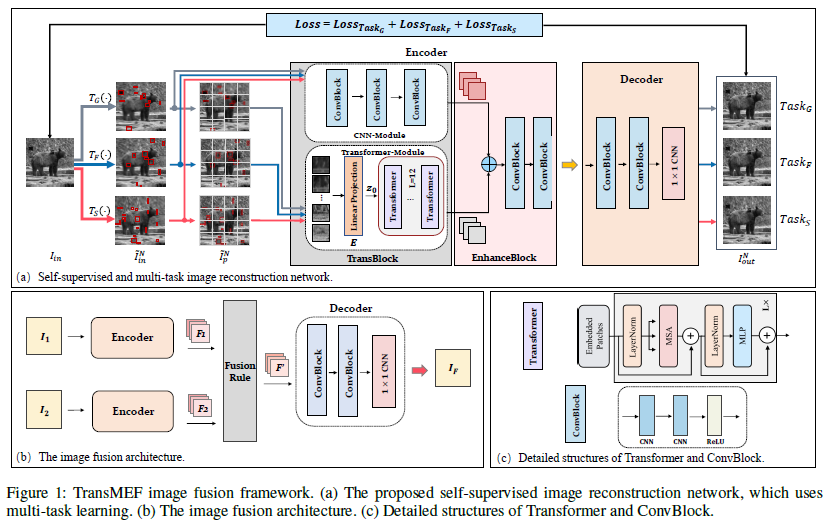

In this paper, we propose TransMEF: a transformer-based multi-exposure image fusion framework via self-supervised multi-task learning. The framework is based on an encoder-decoder network, which can be trained on large natural datasets without the need of ground truth fusion images. We design three self-supervised reconstruction tasks according to the characteristics of multi-exposure images and conduct the three tasks simultaneously through multi-task learning, so that the network can learn the characteristics of multi-exposure images and extract more generalized features. In addition, to compensate for the defect in establishing long-range dependencies in CNN-based architectures, we design an encoder that combines a CNN module with a Transformer module to enable the network to focus on both local and global information. We evaluated our method and compared it to 11 competitive traditional and deep learning-based methods on a latest released multi-exposure image fusion benchmark dataset, and our method achieves the best performance in both subjective and objective evaluations.

Paper Link: https://arxiv.org/abs/2112.01030