Local Implicit Wavelet Transformer for Arbitrary-Scale Super-Resolution

Minghong Duan, Linhao Qu, Shaolei Liu, Manning Wang†

The 35th British Machine Vision Conference 2024

Abstract

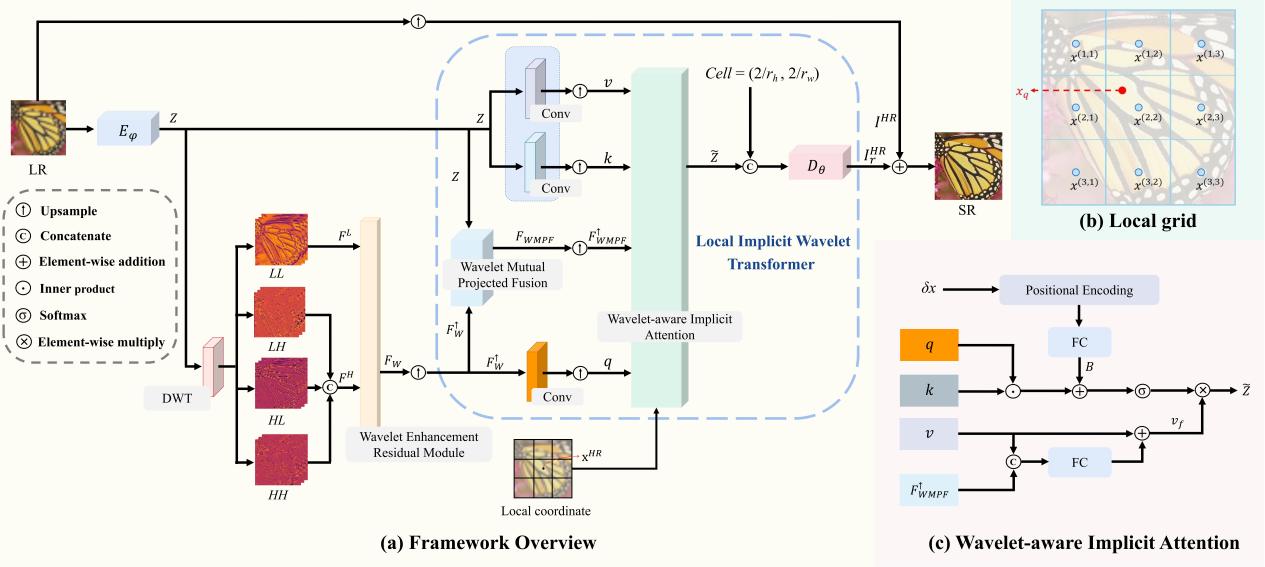

Implicit neural representations have recently shown promise for arbitrary-scale Super-Resolution (SR) of images. Most existing methods predict each pixel of the SR image from the queried coordinate and an ensemble of nearby features, but they overlook high-frequency prior information in the image, which limits their ability to reconstruct high-frequency texture details. To address this issue, we propose the Local Implicit Wavelet Transformer (LIWT) to enhance the restoration of high-frequency texture details. Specifically, we decompose the features extracted by an encoder into four sub-bands containing different frequency information using the Discrete Wavelet Transform (DWT). We then introduce the Wavelet Enhanced Residual Module (WERM) to transform these four sub-bands into high-frequency priors, and use the Wavelet Mutual Projected Fusion (WMPF) and the Wavelet-aware Implicit Attention (WIA) to fully exploit this prior information when recovering high-frequency details. We conduct extensive experiments on benchmark datasets to validate the effectiveness of LIWT. Both qualitative and quantitative results demonstrate that LIWT achieves promising performance in arbitrary-scale SR tasks, outperforming other state-of-the-art methods.
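To make the decomposition step concrete, the following is a minimal sketch of a single-level 2D DWT that splits a feature map into four sub-bands. It assumes a Haar wavelet (the abstract does not name the wavelet used) and uses a random NumPy array in place of encoder features. The function names `haar_dwt2` and `haar_idwt2` are hypothetical and are not taken from the LIWT implementation; WERM, WMPF, and WIA are not modeled here.

```python
import numpy as np


def haar_dwt2(x):
    """Single-level 2D Haar DWT over the last two axes (H and W must be even).

    Returns four sub-bands, each of spatial size (H/2, W/2). The LH/HL naming
    below is one common convention and is an assumption of this sketch.
    """
    a = x[..., 0::2, 0::2]  # top-left sample of each 2x2 block
    b = x[..., 0::2, 1::2]  # top-right
    c = x[..., 1::2, 0::2]  # bottom-left
    d = x[..., 1::2, 1::2]  # bottom-right
    ll = (a + b + c + d) / 2.0  # low-frequency approximation
    lh = (a + b - c - d) / 2.0  # difference between the two rows of a block
    hl = (a - b + c - d) / 2.0  # difference between the two columns of a block
    hh = (a - b - c + d) / 2.0  # diagonal difference
    return ll, lh, hl, hh


def haar_idwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2: reassemble the full-resolution array."""
    out_shape = ll.shape[:-2] + (ll.shape[-2] * 2, ll.shape[-1] * 2)
    x = np.empty(out_shape, dtype=ll.dtype)
    x[..., 0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    x[..., 0::2, 1::2] = (ll + lh - hl - hh) / 2.0
    x[..., 1::2, 0::2] = (ll - lh + hl - hh) / 2.0
    x[..., 1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return x


# Stand-in for encoder features: 64 channels on an 8x8 grid (illustrative sizes).
feats = np.random.default_rng(0).standard_normal((64, 8, 8))
ll, lh, hl, hh = haar_dwt2(feats)
recon = haar_idwt2(ll, lh, hl, hh)
```

Each sub-band has half the spatial resolution of the input, and the transform is invertible, so the four sub-bands together carry the same information as the original features. On a spatially constant input the three high-frequency sub-bands vanish, which is why they are a natural source for high-frequency priors.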