Deep Mutual Learning among Partially Labeled Datasets for Multi-Organ Segmentation

Xiaoyu Liu, Linhao Qu, Ziyue Xie, Yonghong Shi☨, Zhijian Song☨

IEEE Transactions on Medical Imaging (IF=9.8)

Abstract

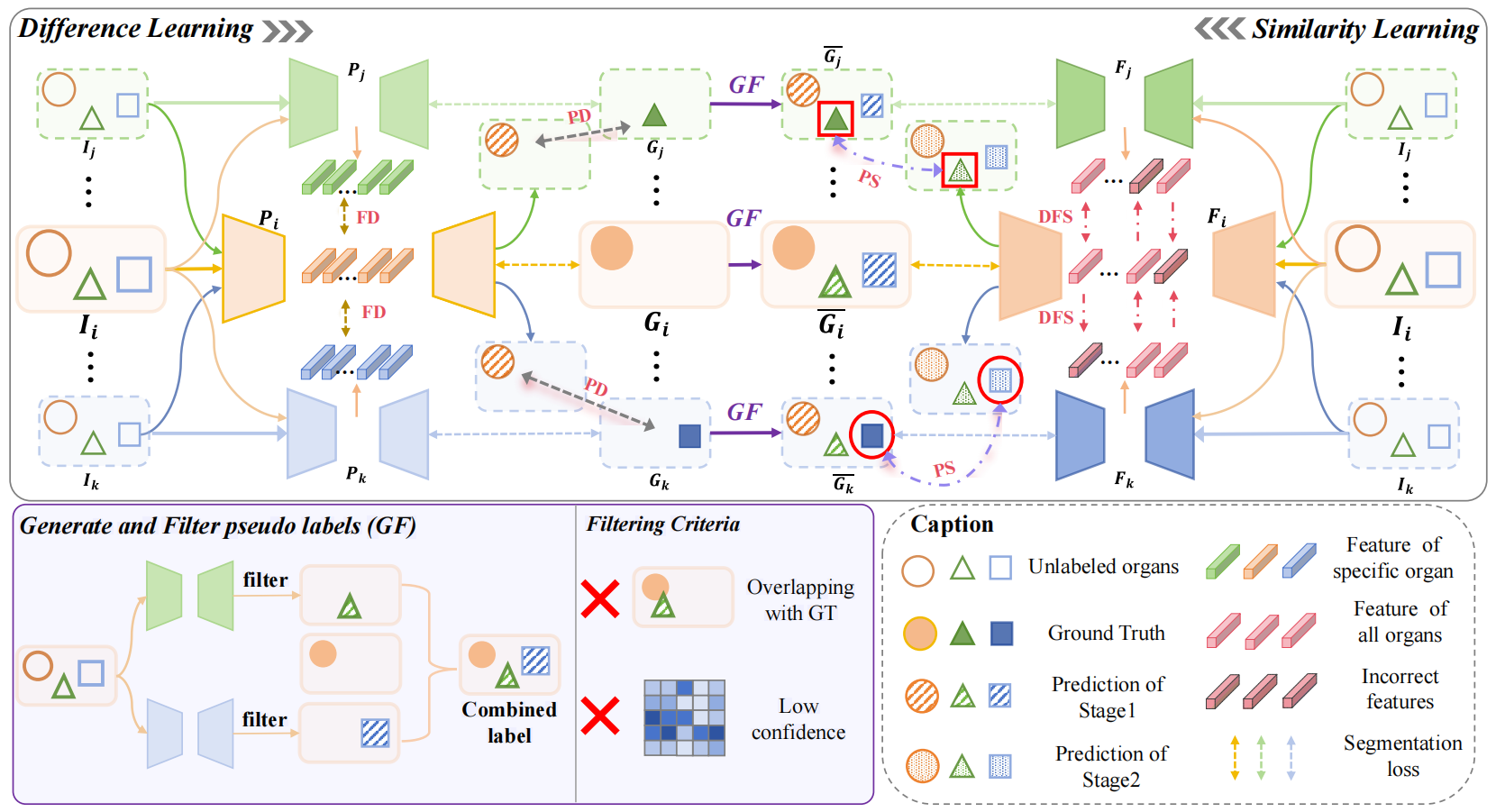

Labeling multiple organs for segmentation is a complex and time-consuming process. As a result, comprehensively labeled multi-organ datasets are scarce, while numerous partially labeled datasets have emerged. Current methods face three critical limitations: incomplete exploitation of the available supervision, complex inference, and insufficient validation of generalization capability. This paper proposes a new framework based on mutual learning that improves multi-organ segmentation by letting partially labeled datasets complement one another. Specifically, the method consists of three key components: (1) training partial-organ segmentation models with Difference Mutual Learning, (2) generating and filtering pseudo labels, and (3) training full-organ segmentation models enhanced by Similarity Mutual Learning. Difference Mutual Learning enables each partial-organ segmentation model to use the labels and features of other datasets as complementary signals, which improves organ detection across datasets and yields better pseudo labels. Similarity Mutual Learning augments the training of each full-organ segmentation model with two additional sources of supervision: ground truths from other datasets and dynamic, reliable transferred features, significantly boosting segmentation accuracy. The resulting model achieves both high accuracy and efficient inference for multi-organ segmentation. Extensive experiments on nine datasets spanning the head and neck, chest, abdomen, and pelvis demonstrate that the proposed method achieves state-of-the-art (SOTA) performance.
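For readers unfamiliar with mutual learning, the sketch below shows the standard two-network deep mutual learning objective (Zhang et al., 2018) that this family of methods builds on: each peer is supervised by the ground truth through cross-entropy and is additionally pulled toward its peer's predicted distribution through a KL-divergence term. This is a minimal illustration under stated assumptions, not the paper's Difference or Similarity Mutual Learning losses, whose exact form the abstract does not give. The function names (`softmax`, `cross_entropy`, `kl_divergence`, `mutual_learning_losses`) are hypothetical, and voxels are assumed to be flattened to an array of shape `(N, C)` with integer labels of shape `(N,)`.

```python
import numpy as np


def softmax(logits, axis=-1):
    # Subtract the per-row max for numerical stability before exponentiating.
    z = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def cross_entropy(probs, labels, eps=1e-12):
    # Mean negative log-probability of the true class over all voxels.
    picked = np.take_along_axis(probs, labels[..., None], axis=-1)[..., 0]
    return float(-np.log(picked + eps).mean())


def kl_divergence(p, q, eps=1e-12):
    # KL(p || q) summed over classes, then averaged over voxels.
    return float((p * (np.log(p + eps) - np.log(q + eps))).sum(axis=-1).mean())


def mutual_learning_losses(logits_a, logits_b, labels):
    """Generic two-peer deep mutual learning losses (hypothetical helper).

    Each peer gets a supervised cross-entropy term plus a KL term toward the
    other peer's prediction. In a real training loop the peer's probabilities
    would be treated as a fixed target (no gradient flows through them).
    """
    p_a, p_b = softmax(logits_a), softmax(logits_b)
    loss_a = cross_entropy(p_a, labels) + kl_divergence(p_b, p_a)
    loss_b = cross_entropy(p_b, labels) + kl_divergence(p_a, p_b)
    return loss_a, loss_b


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    logits_a = rng.normal(size=(8, 4))  # 8 voxels, 4 classes (toy sizes)
    logits_b = rng.normal(size=(8, 4))
    labels = rng.integers(0, 4, size=8)
    print(mutual_learning_losses(logits_a, logits_b, labels))
```

When the two peers produce identical predictions, the KL terms vanish and each loss reduces to plain cross-entropy; the KL terms only contribute when the peers disagree, which is what lets one network transfer knowledge to the other.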