Dual-Stage Clean-Sample Selection for Incremental Noisy Label Learning

Bioengineering (IF=3.7)

Abstract

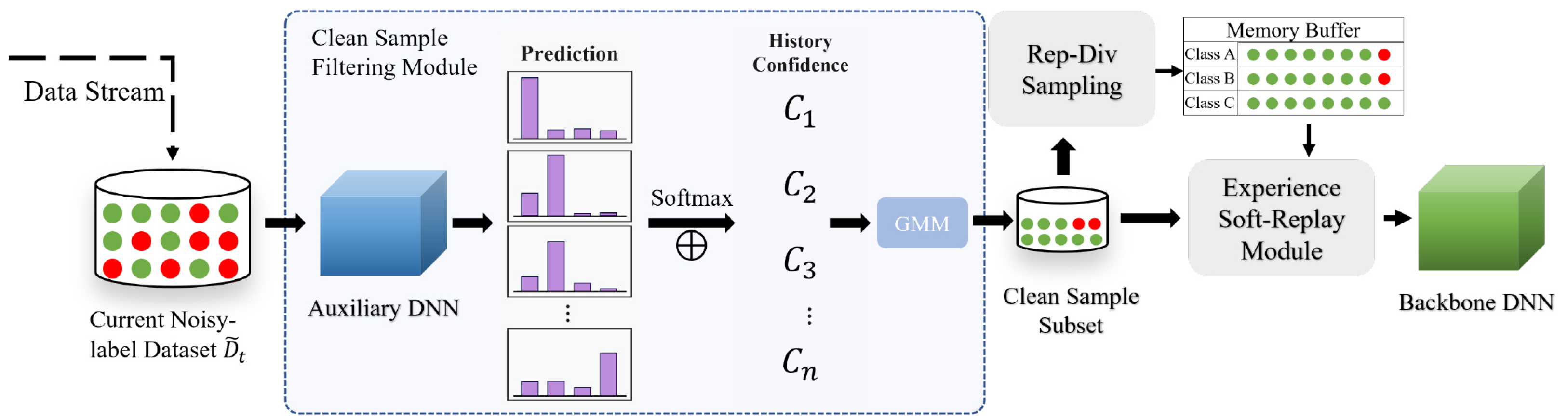

Class-incremental learning (CIL) in deep neural networks suffers from catastrophic forgetting (CF), in which acquiring knowledge of new classes significantly degrades previously learned representations. This challenge is particularly severe in medical image analysis, where costly, expertise-dependent annotations frequently contain pervasive, hard-to-detect noisy labels that substantially compromise model performance. Existing approaches have predominantly addressed CF and noisy labels as separate problems, so their combined effects remain largely unexplored. To address this gap, this paper presents a dual-stage clean-sample selection method for Incremental Noisy Label Learning (DSCNL). The approach comprises two key components: (1) a dual-stage clean-sample selection module that identifies high-confidence samples and uses them to guide the learning of reliable representations while mitigating noise propagation during training, and (2) an experience soft-replay strategy for memory rehearsal that improves the model’s robustness and generalization in the presence of historical noisy labels. Together, these components suppress the adverse influence of noisy labels while alleviating catastrophic forgetting. Extensive evaluations on public medical image datasets show that DSCNL consistently outperforms state-of-the-art CIL methods across diverse classification tasks. The proposed method improves average accuracy by 55% and 31% over baseline methods on datasets with different noise levels, and achieves an average noise reduction rate of 73% under original noise conditions, highlighting its effectiveness and applicability in real-world medical imaging scenarios.