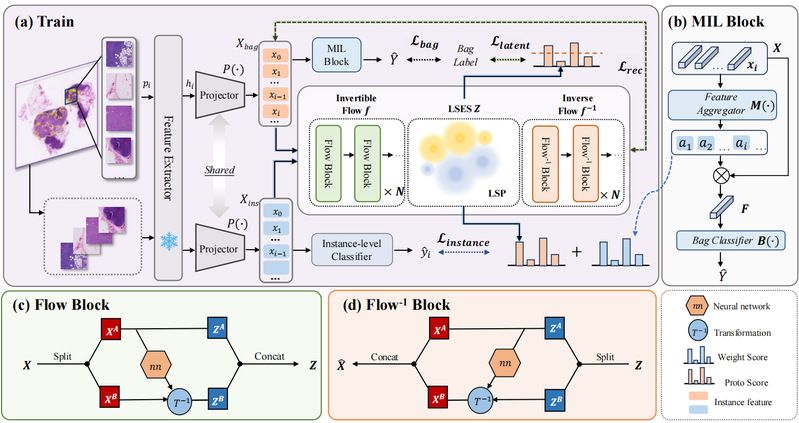

Flow-MIL: Constructing Highly-expressive Latent Feature Space For Whole Slide Image Classification Using Normalizing Flow

Yingfan Ma, Bohan An, Ao Shen, Mingzhi Yuan, Minghong Duan, Manning Wang☨

International Conference on Computer Vision (ICCV 2025)

Abstract

Whole Slide Image (WSI) classification has been widely used in pathological diagnosis and prognosis prediction. It is commonly formulated as a weakly-supervised Multiple Instance Learning (MIL) problem because WSIs are very large and fine-grained annotations are difficult to obtain. In the MIL formulation, a WSI is treated as a bag and the patches cut from it are treated as its instances. Most existing methods first extract instance features and then aggregate them into a bag feature with an attention-based mechanism for bag-level prediction. Because these models are trained using only bag-level labels, they often lack instance-level insight and lose detailed semantic information, which limits their bag-level classification performance and impairs their ability to explore highly expressive information. In this paper, we propose Flow-MIL, which leverages a normalizing flow-based Latent Semantic Embedding Space (LSES) to enhance feature representation. By mapping patches into the LSES, a simple yet highly expressive latent space, Flow-MIL achieves effective slide-level aggregation while preserving critical semantic information. We also introduce Gaussian Mixture Model-based Latent Semantic Prototypes (LSP) within the LSES to capture the pathological distribution of each class and to refine pseudo instance labels. Extensive experiments on three public WSI datasets show that Flow-MIL outperforms recent state-of-the-art methods in both bag-level and instance-level classification and offers improved interpretability.
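To make the MIL formulation concrete, the following minimal sketch shows one common form of attention-based aggregation, in which instance features from a single bag are weighted by softmax-normalized attention scores and summed into a bag feature. It illustrates the generic baseline pipeline described in the abstract, not Flow-MIL itself. The function name `attention_pool`, the parameters `V` and `w`, and all dimensions are hypothetical choices made for illustration, and the random arrays stand in for features from a real patch encoder.

```python
import numpy as np

def attention_pool(instances, V, w):
    """Aggregate instance features of shape (n, d) into one bag feature of shape (d,).

    V (d, h) and w (h,) are illustrative attention parameters; in a real
    model they would be learned from bag-level labels.
    """
    scores = np.tanh(instances @ V) @ w           # one unnormalized score per instance, shape (n,)
    scores = scores - scores.max()                # shift for numerical stability of the softmax
    weights = np.exp(scores) / np.exp(scores).sum()  # attention weights, non-negative and summing to 1
    bag = weights @ instances                     # weighted sum of instance features, shape (d,)
    return bag, weights

# Toy bag: 5 patches (instances), each with an 8-dimensional feature.
rng = np.random.default_rng(0)
n, d, h = 5, 8, 4
instances = rng.normal(size=(n, d))
V = rng.normal(size=(d, h))
w = rng.normal(size=h)

bag_feature, attn = attention_pool(instances, V, w)
print(bag_feature.shape, attn.round(3))
```

Because the only supervision in this setup is the bag label, the attention weights are the sole instance-level signal such a model exposes, which is the limitation the abstract attributes to existing methods.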