Reducing Domain Gap in Frequency and Spatial domain for Cross-modality Domain Adaptation on Medical Image Segmentation

Shaolei Liu*, Siqi Yin*, Linhao Qu, Manning Wang†, Zhijian Song†

Thirty-Seventh AAAI Conference on Artificial Intelligence (CCF-A)

Abstract

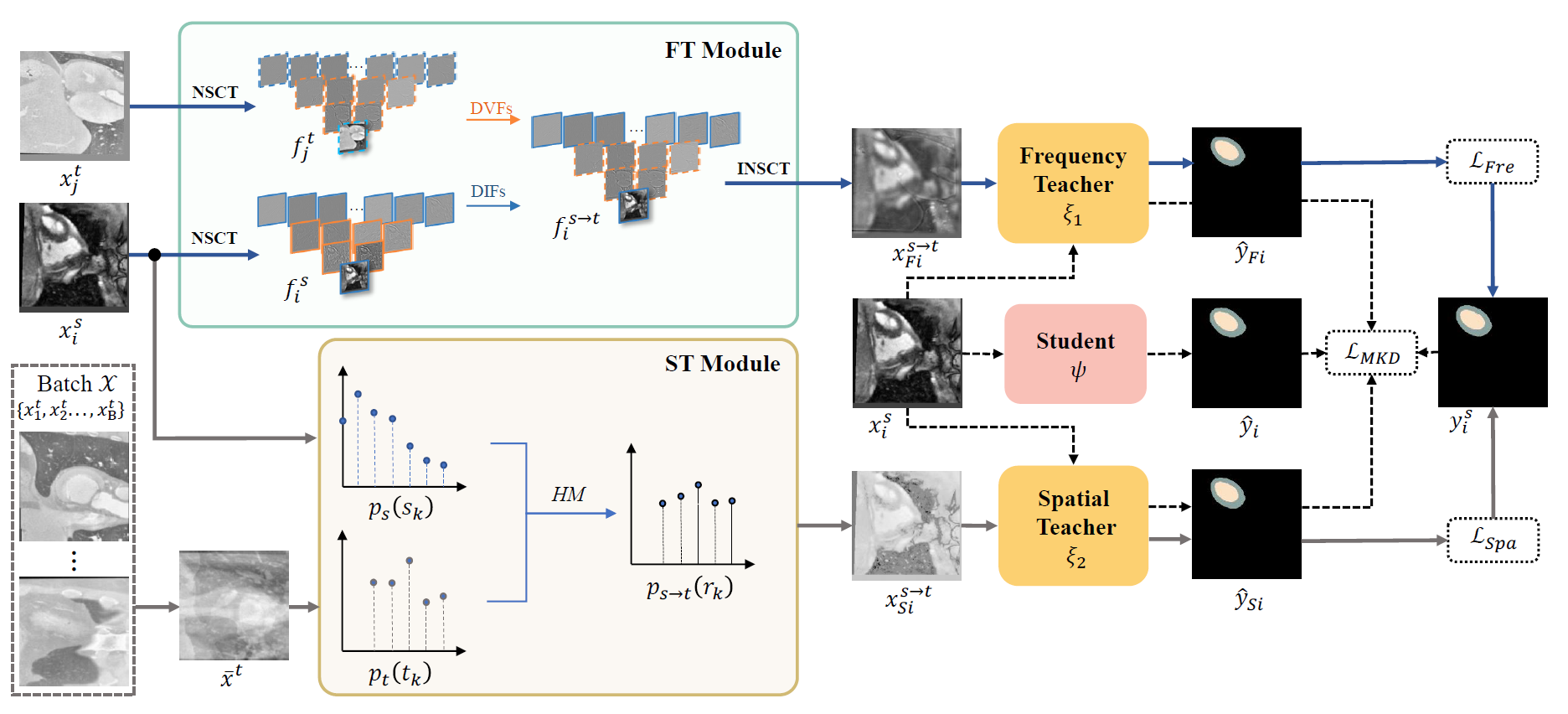

Unsupervised domain adaptation (UDA) aims to train a model on a labeled source domain so that it performs well on an unlabeled target domain. In medical image segmentation, most existing UDA methods depend on adversarial learning to address the domain gap between different image modalities, which is ineffective because of its complicated training process. In this paper, we propose a simple yet effective UDA method based on frequency and spatial domain transfer under a multi-teacher distillation framework. In the frequency domain, we first introduce the non-subsampled contourlet transform to identify domain-invariant and domain-variant frequency components (DIFs and DVFs), and then keep the DIFs unchanged while replacing the DVFs of the source domain images with those of the target domain images to narrow the domain gap. In the spatial domain, we propose a batch momentum update-based histogram matching strategy to reduce the domain-variant image style bias. Experiments on two cross-modality medical image segmentation datasets (cardiac and abdominal) show that our proposed method achieves superior performance compared to state-of-the-art methods.
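
To make the spatial-domain idea more concrete, the following is a minimal sketch of histogram matching against a target histogram that is updated with momentum across batches. It is an illustration only and not the authors' implementation: the abstract does not specify the update rule, so the exponential moving average, the momentum value of 0.9, the 256-bin resolution, the assumption that intensities are scaled to [0, 1], and all function names are hypothetical.

```python
import numpy as np

N_BINS = 256  # assumption: intensities are scaled to [0, 1] and binned into 256 levels


def batch_histogram(images, n_bins=N_BINS):
    """Normalized intensity histogram pooled over a batch of images in [0, 1]."""
    hist, _ = np.histogram(np.asarray(images), bins=n_bins, range=(0.0, 1.0))
    return hist / hist.sum()


def momentum_update(running_hist, batch_hist, momentum=0.9):
    """Exponential moving average of the target histogram.

    The update rule and the momentum value are assumptions for illustration.
    """
    if running_hist is None:
        return batch_hist
    return momentum * running_hist + (1.0 - momentum) * batch_hist


def match_to_histogram(image, target_hist, n_bins=N_BINS):
    """Remap image intensities so their CDF follows the CDF of target_hist."""
    src_hist, edges = np.histogram(image, bins=n_bins, range=(0.0, 1.0))
    src_cdf = np.cumsum(src_hist) / image.size
    tgt_cdf = np.cumsum(target_hist)
    centers = 0.5 * (edges[:-1] + edges[1:])
    # For each source bin, look up the target intensity with the same CDF value.
    mapping = np.interp(src_cdf, tgt_cdf, centers)
    # Assign every pixel to its source bin, then apply the per-bin mapping.
    bin_idx = np.clip((image * n_bins).astype(int), 0, n_bins - 1)
    return mapping[bin_idx]
```

In a training loop, one would presumably call `momentum_update` once per target batch and then pass each source image through `match_to_histogram` with the running histogram, so that the source style tracks a smoothed estimate of the target intensity distribution rather than a single target image.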