Cold-start Active Learning for Image Classification

Qiuye Jin, Mingzhi Yuan, Shiman Li, Haoran Wang, Manning Wang†, Zhijian Song†

Information Sciences (IF=8.233)

Abstract

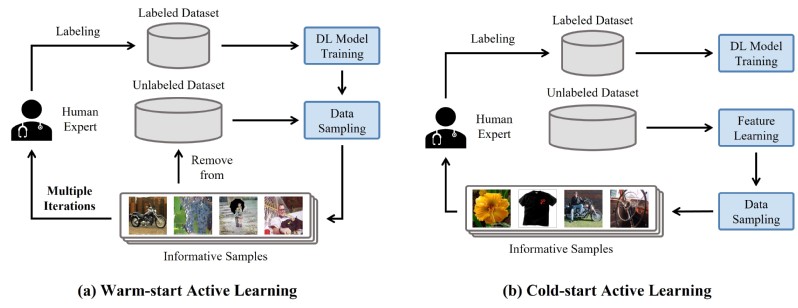

Active Learning (AL) aims to select the most valuable samples from an unlabeled pool for annotation, so that a training dataset can be built at minimal labeling cost. Traditional methods require a small set of initially labeled samples to start active selection and then query annotations incrementally over several iterations. This scheme is poorly suited to deep learning for two reasons. First, an initial labeled set is not always available. Second, traditional models usually perform poorly in the early iterations because they receive limited training feedback. We propose, for the first time, a Cold-start AL model based on Representative sampling (CALR), which selects valuable samples without an initial labeled set or iterative feedback from the target model. Experiments on three image classification datasets, CIFAR-10, CIFAR-100 and Caltech-256, show that CALR achieves new state-of-the-art AL performance in cold-start settings. Under low annotation budgets in particular, our method achieves up to a 10% performance increase over traditional methods. CALR can also be combined with warm-start methods to improve start-up efficiency and further raise the performance ceiling of AL, which broadens its range of application.
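To make the idea of cold-start selection concrete, the following is a minimal sketch of one generic form of representative sampling. It is not the CALR algorithm, whose details the abstract does not give. It assumes that each unlabeled image has already been mapped to a feature vector (for example by a self-supervised encoder, which is an assumption here), clusters those features with a plain k-means, and queries the sample nearest each cluster centre. The function name `select_representatives` and its parameters are hypothetical.

```python
import numpy as np


def select_representatives(features, budget, n_iter=20, seed=0):
    """Return `budget` distinct sample indices chosen without any labels.

    Illustrative only: k-means on the feature vectors, then the sample
    closest to each centroid. This is an assumed stand-in for
    representative sampling, not the method proposed in the paper.
    """
    features = np.asarray(features, dtype=float)
    n = features.shape[0]
    if not 0 < budget <= n:
        raise ValueError("budget must be between 1 and the pool size")

    rng = np.random.default_rng(seed)
    # Initialise the centroids from distinct, randomly chosen samples.
    centroids = features[rng.choice(n, size=budget, replace=False)].copy()

    for _ in range(n_iter):
        # Squared Euclidean distance from every sample to every centroid.
        d2 = ((features[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        assign = d2.argmin(axis=1)
        for k in range(budget):
            members = features[assign == k]
            if len(members) > 0:  # keep the old centroid if a cluster is empty
                centroids[k] = members.mean(axis=0)

    # For each centroid, take the nearest sample that is not yet selected.
    d2 = ((features[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    selected = []
    for k in range(budget):
        for idx in np.argsort(d2[:, k]):
            if int(idx) not in selected:
                selected.append(int(idx))
                break
    return selected


if __name__ == "__main__":
    # Random vectors stand in for image features in this toy run.
    pool = np.random.default_rng(1).normal(size=(200, 16))
    print(select_representatives(pool, budget=10))
```

The selected indices would be sent for annotation in a single round, with no initial labeled set and no feedback from a trained target model, which is the cold-start setting described above.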